At Hacker School, Fridays are a little different to the rest of the week. Firstly they’re optional, and secondly, rather than working on your usual projects, it’s typical to do interview preparation.

This consists of mock interviews, fun with recursion, and coding challenges. One such challenge is to “Create a URL shortener in under 2 hours”, which has become quite popular recently.

When faced with this challenge, I opted for Ruby with the Sinatra web framework backed by MySQL as I am pretty familiar with them, and was confident that I could complete the challenge within the time constraints.

Indeed 1 hour 54 minutes into the challenge (including a break for lunch!) I had a functioning system and spent the remaining few minutes tidying up my code. At the demo/review session it worked (phew!). What would normally have happened is we’d each return to our own projects and never look at that code again.

However, a conversation at the end of the review sparked my interest: how useful would our freshly written services be in the real world – would they scale? So the following Friday I decided to embark upon some performance testing and optimisation of the service I had built.

My goal was to focus on the low-hanging fruit available for making a web service perform significantly better, and I was able to take it from under 15 requests/second initially to over 1000 requests/second on the same hardware!

I decided to focus on the URL lookup phase – taking the short code as an input and responding with a 302 – because for a URL shortening service this will make up the vast majority of requests. I preloaded the service with 10,000 short codes.
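
As a rough illustration of that lookup path (not the code written during the challenge), here is a minimal Rack-style handler using only the Ruby standard library. The `URLS` table and the `LOOKUP_APP` name are assumptions made for the sketch; the real service sat behind Sinatra and a datastore.

```ruby
# Hypothetical in-memory table standing in for the real datastore.
URLS = { "3pxred" => "http://bash.sh?96" }.freeze

# A Rack-compatible app is any callable that takes an env hash and returns
# [status, headers, body].
LOOKUP_APP = lambda do |env|
  shortcode = env["PATH_INFO"].to_s.delete_prefix("/")
  url = URLS[shortcode]
  if url
    # Known short code: redirect the client to the stored URL.
    [302, { "location" => url }, []]
  else
    [404, { "content-type" => "text/plain" }, ["Unknown short code\n"]]
  end
end

status, headers, _body = LOOKUP_APP.call("PATH_INFO" => "/3pxred")
puts "#{status} #{headers['location']}"
```

Everything measured in the rest of this post is some variation of this one request: a key goes in, a redirect comes out.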

For testing I used JMeter, as I was familiar with it and my requirements were pretty simple – I needed to hit the endpoint with data fed from some kind of source and ramp up load to find the point where response times begin to rise and request rate plateaus.
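
Finding that plateau can also be done from recorded results rather than by eye. The sketch below assumes a list of (threads, throughput) samples exported from a load test; the `Sample` struct, the `plateau_point` helper, the 5% threshold and the figures are all made up for illustration.

```ruby
# Each sample pairs an offered load (concurrent threads) with the measured
# throughput in requests/second. The figures below are invented.
Sample = Struct.new(:threads, :throughput)

# Return the last sample before throughput stops improving by at least
# min_gain (a fraction, e.g. 0.05 for 5%), or nil if it never levels off.
def plateau_point(samples, min_gain: 0.05)
  samples.each_cons(2) do |prev, curr|
    gain = (curr.throughput - prev.throughput) / prev.throughput.to_f
    return prev if gain < min_gain
  end
  nil
end

samples = [
  Sample.new(1, 100.0),
  Sample.new(2, 195.0),
  Sample.new(4, 380.0),
  Sample.new(8, 390.0),
  Sample.new(16, 385.0)
]

knee = plateau_point(samples)
puts "Throughput plateaus at #{knee.threads} threads (#{knee.throughput} req/s)"
```

With these invented numbers the knee is at 4 threads, since doubling the load to 8 threads adds less than 5% throughput.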

I started out testing on my laptop (2013 MacBook Retina 13″), and the maximum request rate I could get out of it was 14.6 requests/second. This translates to around 50,000 requests per hour – not too bad, but really I’d been hoping for something better.

CPU usage seemed quite high, so I used `procsystime` – a frontend to DTrace – to look into what was going on during a load test, and spotted that 99% of the CPU time for the program was spent forking:

    CPU Times for PID 7834,

                 SYSCALL          TIME (ns)
     psynch_cvclrprepost               1262
                 madvise              25283
          close_nocancel           39823946
                    fork        17151822963
                  TOTAL:        17335516977
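
The 99% figure can be checked directly from that output. The snippet below simply copies the numbers shown above; note that the listed rows do not add up to the reported total, so the excerpt presumably omits some syscalls, and the share is computed against the reported total.

```ruby
# Syscall times in nanoseconds, copied from the procsystime output above.
SYSCALL_NS = {
  "psynch_cvclrprepost" => 1_262,
  "madvise"             => 25_283,
  "close_nocancel"      => 39_823_946,
  "fork"                => 17_151_822_963
}.freeze

# The TOTAL line as reported by procsystime.
REPORTED_TOTAL_NS = 17_335_516_977

# Percentage of the reported total spent in the named syscall.
def share_of_total(name)
  (SYSCALL_NS.fetch(name) * 100.0 / REPORTED_TOTAL_NS).round(2)
end

puts "fork: #{share_of_total('fork')}% of reported syscall time"
```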

I did some digging on this, and discovered that when Sinatra is run under Shotgun, it restarts upon every request. This is great in a development environment as it means your latest changes are always live; however, in an environment requiring performance, it’s not ideal.
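
To get a feel for why a fork per request is so expensive, here is a small standard-library sketch that times the same trivial handler called in-process and called inside a freshly forked child. It is not how Shotgun is implemented (Shotgun also reloads the application in the child, which this sketch does not do); `handle`, `handle_in_fork` and `seconds_for` are hypothetical names, and it needs a platform that supports `fork`.

```ruby
# Stand-in for the real request handler (hypothetical).
def handle(shortcode)
  "http://example.com/#{shortcode}"
end

# Serve one request in a freshly forked child and pass the response back
# to the parent over a pipe.
def handle_in_fork(shortcode)
  reader, writer = IO.pipe
  pid = fork do
    reader.close
    writer.write(handle(shortcode))
    writer.close
    exit!(0) # leave the child immediately, skipping at_exit hooks
  end
  writer.close
  response = reader.read
  reader.close
  Process.wait(pid)
  response
end

# Wall-clock seconds taken to run the block `iterations` times.
def seconds_for(iterations)
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  iterations.times { |i| yield i.to_s }
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
end

in_process = seconds_for(200) { |code| handle(code) }
forked     = seconds_for(200) { |code| handle_in_fork(code) }
puts format("in-process: %.4fs, fork per request: %.4fs", in_process, forked)
```

The absolute timings depend entirely on the machine, but the forked variant pays for process creation and teardown on every call, which is the cost that dominated the trace.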

Before making any changes, I moved the running of the app to a pair of c3.2xlarge instances running Ubuntu 14.04 in AWS. The reason for this was twofold: I wanted to separate the duties of load injection and app serving onto individual servers, and I wanted to ensure that background processes on my laptop didn’t adversely affect results.

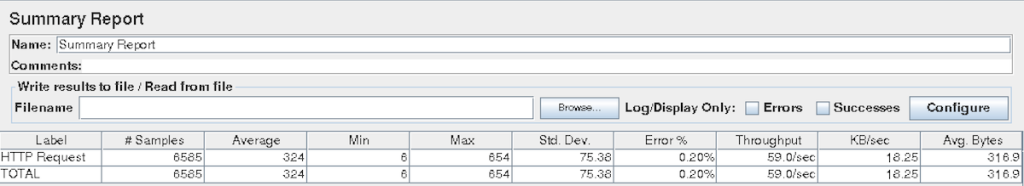

I re-ran the previous load test on the new host with no other changes and recorded results of 59 requests/second. This was somewhat reassuring as with the c3.2xlarge instance having four times the number of cores of my laptop, it meant that the app scaled near perfectly in line with the increase in available CPU capacity.

Switching the app to run directly with `ruby urlshortener.rb` and re-running the load test gave a significant performance improvement, with 515 requests/second being served, or about 1.8M/hour.
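
The conversions quoted so far are easy to reproduce. The helpers below are a small sketch (the names `per_hour` and `scaling_efficiency` are made up) using only figures from this post: 14.6 and 515 requests/second, and the 59 requests/second measured on a host with four times the cores.

```ruby
SECONDS_PER_HOUR = 3600

# Convert a sustained request rate into requests per hour.
def per_hour(requests_per_second)
  (requests_per_second * SECONDS_PER_HOUR).round
end

# Ratio of measured throughput to what perfectly linear scaling would
# predict; 1.0 means throughput grew exactly in line with the core count.
def scaling_efficiency(base_rate, core_multiple, measured_rate)
  (measured_rate / (base_rate * core_multiple).to_f).round(2)
end

puts per_hour(14.6)                   # laptop under Shotgun     → 52560
puts per_hour(515)                    # c3.2xlarge, plain `ruby` → 1854000
puts scaling_efficiency(14.6, 4, 59)  # laptop → c3.2xlarge      → 1.01
```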

This was somewhat satisfactory, but I wanted more. I thought I’d take a look at the datastore I was using.

    mysql> SET profiling = 1;
    Query OK, 0 rows affected, 1 warning (0.00 sec)

    mysql> SELECT url FROM urls WHERE shortcode='3pxred';
    +-------------------+
    | url               |
    +-------------------+
    | http://bash.sh?96 |
    +-------------------+
    1 row in set (0.01 sec)

    mysql> SHOW PROFILES;
    +----------+------------+-------------------------------------------------+
    | Query_ID | Duration   | Query                                           |
    +----------+------------+-------------------------------------------------+
    |        1 | 0.00870700 | select url from urls where shortcode='3pxred'   |
    +----------+------------+-------------------------------------------------+
    1 row in set, 1 warning (0.00 sec)

So that was 8.7ms to return the URL. I did this profiling over a significant number of queries, and it proved fairly typical. ~9ms is quick, but I wondered if it could be improved upon.

Keeping in mind that my goal here was to pick off low-hanging fruit and not spend a lot of time optimising one area, I quickly re-worked my service to use Redis as a backend. Redis is a key-value store rather than a general-purpose database like MySQL, and in this case I would be storing keys (short codes) and values (URLs), so it seemed like an ideal use case.
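
The key-value shape of the problem can be sketched as a thin wrapper around any client that offers `get(key)` and `set(key, value)`, which is the interface the `redis` gem’s client exposes. The `ShortcodeStore` class, the `shortcode:` key prefix and the `FakeClient` stand-in are assumptions for illustration, not the code used in the service.

```ruby
# Wraps any client that responds to get(key) and set(key, value).
class ShortcodeStore
  PREFIX = "shortcode:" # hypothetical key namespace

  def initialize(client)
    @client = client
  end

  # Store the URL under its short code.
  def save(shortcode, url)
    @client.set(PREFIX + shortcode, url)
  end

  # Return the URL for a short code, or nil if it is unknown.
  def lookup(shortcode)
    @client.get(PREFIX + shortcode)
  end
end

# In-memory stand-in so the sketch runs without a Redis server.
class FakeClient
  def initialize
    @data = {}
  end

  def set(key, value)
    @data[key] = value
    "OK"
  end

  def get(key)
    @data[key]
  end
end

store = ShortcodeStore.new(FakeClient.new) # with the redis gem: Redis.new
store.save("3pxred", "http://bash.sh?96")
puts store.lookup("3pxred")
```

Keeping the store behind two methods is also what makes a backend swap like this one cheap: the lookup route does not need to know which datastore is underneath.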

Re-running the load test and monitoring the Redis instance for latency, I saw that typical latency was now 0.3ms, better than an order of magnitude improvement. More importantly, the load test was now running at over 620 requests/second.

    $ redis-cli --latency-history
    min: 0, max: 3, avg: 0.30 (1369 samples) -- 15.01 seconds range
    min: 0, max: 4, avg: 0.30 (1372 samples) -- 15.00 seconds range
    min: 0, max: 5, avg: 0.28 (1376 samples) -- 15.01 seconds range

There was another reason for swapping out to Redis here: I was curious as to whether the performance would differ running under JRuby as opposed to the native binary. With MySQL, I would have needed to port my queries over to use the MySQL JDBC driver rather than the mysql2 gem I had been using, and it was definitely less effort to just use Redis.

In many ways, a simple web app which is just serving requests should be an ideal case for JRuby, as the JVM provides a very performant, well-threaded runtime. Thankfully it doesn’t disappoint: the initial run generated over 2500 requests/second, roughly four times the throughput of the previous step.

This would probably have been a satisfactory outcome, but one of the wonderful things about the JVM is the rich diagnostic information that is available at your fingertips.

Since JDK 1.7.0_40, Java Flight Recorder has been bundled with the Oracle HotSpot JVM. It exposes a huge number of performance metrics from within the JVM with minimal (<2%) performance overhead. Flight Recorder is an enhancement of a product formerly offered with BEA JRockit, and its availability for HotSpot is part of Oracle’s long-term strategy of one JVM built around HotSpot.

For production use, you need to purchase a commercial JVM license from Oracle, but as explained here, the tools are “freely available for download for development and evaluation purposes as part of JDK 7u40, but require the Oracle Java SE Advanced license for production use under the Oracle Binary Code License Agreement”.

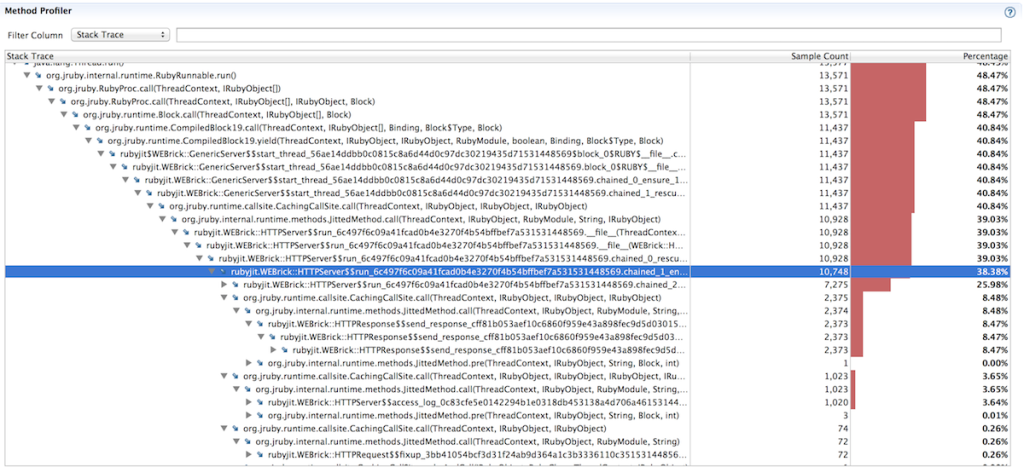

I tested my app with Flight Recorder, and then loaded the data in its sister application, Java Mission Control. It’s an incredibly powerful tool, so if you’ve not used it before, I would recommend watching some of the YouTube videos that Oracle have made.

Jumping into the method profiler, I could see that a lot of the time was being spent in WEBrick – the default HTTP server that Sinatra runs under.

From looking at the JRuby servers page I saw that many alternatives exist as drop-in replacements, and opted to try three of them:

- trinidad – which is built around Apache Tomcat

- mizuno – which is built around Jetty

- puma – which is written in Ruby, but designed for high performance

For trinidad and puma, it was just a case of installing the gem and restarting the app. For mizuno I needed to generate a Rack config file to spawn it. I ran each with its default configuration and didn’t make any attempt to optimise them, because the focus of this exercise was to make big steps with little effort.

Each made an improvement. Trinidad boosted performance from ~2500 requests/second to just over 3000 requests/second. Mizuno took this a bit further, up to 3400 requests/second.

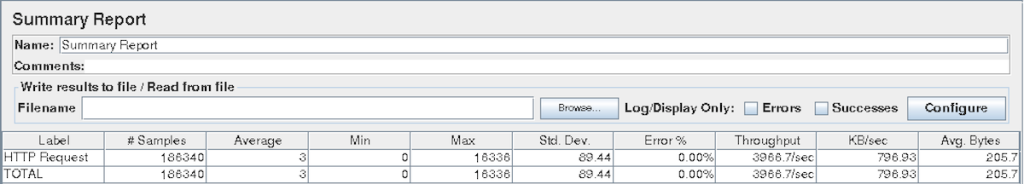

Puma, though, was a revelation for my use case, taking performance up to just shy of 4000 requests/second, with mean response times in the order of 3ms.

For completeness I then re-ran the tests on my own laptop, and recorded 1022 requests/second on there.

So the end result – for now – is a capacity improvement of over 6500% on both platform sizes, which I’m reasonably happy with. This was combined with a latency improvement from over 300ms to just 3ms per call, which is far more acceptable.
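
For anyone wanting to check that headline figure, the arithmetic is sketched below using the before and after rates quoted in this post. The `improvement` helper is a made-up name, and the AWS “after” rate is rounded up to 4000 (the measurement was just shy of that), so its result is slightly generous.

```ruby
# Express a before/after throughput pair as a multiplier and as a
# percentage increase over the starting rate.
def improvement(before, after)
  multiplier = after / before.to_f
  {
    multiplier: multiplier.round(1),
    percent_increase: ((multiplier - 1) * 100).round
  }
end

laptop = improvement(14.6, 1022) # Shotgun + MySQL → JRuby + Redis + Puma
aws    = improvement(59, 4000)   # same change on the c3.2xlarge

puts "laptop: #{laptop[:multiplier]}x (+#{laptop[:percent_increase]}%)"
puts "aws:    #{aws[:multiplier]}x (+#{aws[:percent_increase]}%)"
```

Both come out at a little under a 70-fold increase in throughput, which is where “over 6500%” comes from.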

It’s rare to be able to make such big gains in so few steps, but this post serves to highlight the benefit of understanding the strengths and weaknesses of the tools you’re using and the environment you’re in. Tools which work well in a development environment – like Shotgun – can prove detrimental to aspirations of performance. Similarly, JRuby works really well for a long-running service, but the Java initialisation time could make it frustrating for a rapidly changing development environment or for short-running scripts.

In a future post I will explore the more intensive way of optimising performance: taking an application which is already running in a fairly performant manner, and looking to eke out additional performance through profiling and identifying the right configuration tweaks and changes to make.